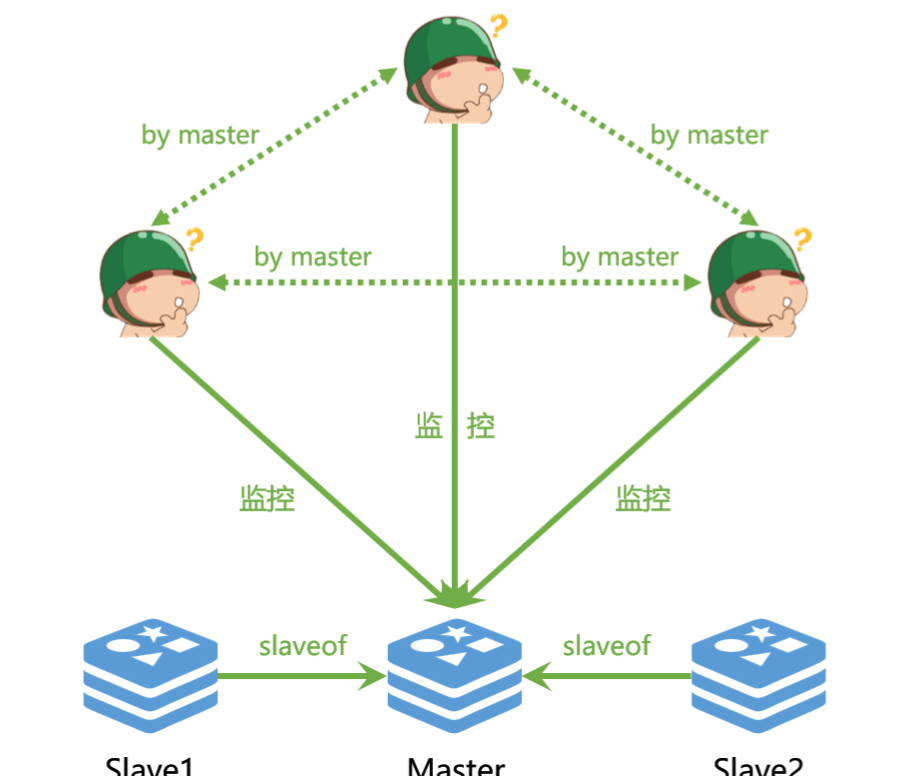

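Redis Sentinel provides high availability for Redis. With Sentinel you can build a Redis `HA (High Availability)` deployment that withstands certain kinds of failures without human intervention.

The main features Redis Sentinel provides:

- Automatic failover: when the master fails, Sentinel automatically picks a replica and promotes it to master.

- Service discovery: Sentinel acts as the authoritative source that clients use to discover the master. Clients connect to Sentinel to obtain the current master's address.

Redis Sentinel is a high-availability layer built on top of the original master/slave (replication) model.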
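The service-discovery role mentioned above comes down to one Sentinel command, `SENTINEL get-master-addr-by-name <master-name>`, which returns the current master's IP and port. The sketch below sends that command over a raw socket using only the Python standard library, to show what a Sentinel-aware client does under the hood.

- The helper names (`encode_command`, `parse_bulk_string_array`, `get_master_addr`) are hypothetical.
- A real application would normally use a client library with Sentinel support instead.
- It assumes the Sentinel has no password set, as in the sentinel.conf used in this article.

```python
from __future__ import annotations

import socket


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        parts.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(parts)


def parse_bulk_string_array(reply: bytes) -> list[str] | None:
    """Parse a RESP2 array of bulk strings; a null array (*-1) means 'unknown master'."""
    if reply.startswith(b"*-1\r\n"):
        return None
    if not reply.startswith(b"*"):
        raise ValueError(f"unexpected reply: {reply!r}")
    header_end = reply.index(b"\r\n")
    count = int(reply[1:header_end])
    pos = header_end + 2
    items = []
    for _ in range(count):
        if reply[pos:pos + 1] != b"$":
            raise ValueError(f"expected a bulk string at byte {pos}")
        len_end = reply.index(b"\r\n", pos)
        length = int(reply[pos + 1:len_end])
        start = len_end + 2
        items.append(reply[start:start + length].decode())
        pos = start + length + 2  # skip the payload and its trailing CRLF
    return items


def get_master_addr(host: str, port: int, master_name: str = "mymaster",
                    timeout: float = 1.0) -> tuple[str, int] | None:
    """Ask one Sentinel for the current address of the master named master_name."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("SENTINEL", "get-master-addr-by-name", master_name))
        # Simplification: assume the short reply arrives in a single recv().
        reply = sock.recv(4096)
    addr = parse_bulk_string_array(reply)
    if addr is None:
        return None
    ip, port_str = addr
    return ip, int(port_str)
```

For example, `get_master_addr("192.168.98.138", 26380)` would ask sentinel1 (through its mapped host port) where `mymaster` currently lives. After a failover, the same call returns the new master's announced address.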

Setting up on a Docker internal network

Prepare docker-compose.yml

First, create a docker-compose.yml file that defines the Redis master, the replicas and the Sentinel services:

```yaml
networks:
  redis-replication:
    driver: bridge
    ipam:
      config:
        - subnet: 172.25.0.0/24

services:
  # master
  master:
    image: redis
    container_name: redis-master
    restart: always
    command: ["redis-server", "/etc/redis.conf"]
    ports:
      - "6390:6379"
    volumes:
      - "/opt/docker/redis/sentinel_with_network/redis1.conf:/etc/redis.conf"
      - "/opt/docker/redis/sentinel_with_network/data1:/data"
    networks:
      redis-replication:
        ipv4_address: 172.25.0.101

  # replica 1
  slave1:
    image: redis
    container_name: redis-slave1
    restart: always
    command: ["redis-server", "/etc/redis.conf"]
    ports:
      - "6391:6379"
    volumes:
      - "/opt/docker/redis/sentinel_with_network/redis2.conf:/etc/redis.conf"
      - "/opt/docker/redis/sentinel_with_network/data2:/data"
    networks:
      redis-replication:
        ipv4_address: 172.25.0.102

  # replica 2
  slave2:
    image: redis
    container_name: redis-slave2
    restart: always
    command: ["redis-server", "/etc/redis.conf"]
    ports:
      - "6392:6379"
    volumes:
      - "/opt/docker/redis/sentinel_with_network/redis3.conf:/etc/redis.conf"
      - "/opt/docker/redis/sentinel_with_network/data3:/data"
    networks:
      redis-replication:
        ipv4_address: 172.25.0.103

  # sentinel 1
  sentinel1:
    image: redis
    container_name: redis-sentinel1
    restart: always
    ports:
      - "26380:26379"
    # Sentinel rewrites its config file at runtime, so it runs on a copy
    # instead of the mounted file
    command: ["/bin/bash", "-c", "cp /etc/sentinel.conf /sentinel.conf && redis-sentinel /sentinel.conf"]
    volumes:
      - /opt/docker/redis/sentinel_with_network/sentinel1.conf:/etc/sentinel.conf
    networks:
      redis-replication:
        ipv4_address: 172.25.0.201

  # sentinel 2
  sentinel2:
    image: redis
    container_name: redis-sentinel2
    restart: always
    ports:
      - "26381:26379"
    command: ["/bin/bash", "-c", "cp /etc/sentinel.conf /sentinel.conf && redis-sentinel /sentinel.conf"]
    volumes:
      - /opt/docker/redis/sentinel_with_network/sentinel2.conf:/etc/sentinel.conf
    networks:
      redis-replication:
        ipv4_address: 172.25.0.202

  # sentinel 3
  sentinel3:
    image: redis
    container_name: redis-sentinel3
    restart: always
    ports:
      - "26382:26379"
    command: ["/bin/bash", "-c", "cp /etc/sentinel.conf /sentinel.conf && redis-sentinel /sentinel.conf"]
    volumes:
      - /opt/docker/redis/sentinel_with_network/sentinel3.conf:/etc/sentinel.conf
    networks:
      redis-replication:
        ipv4_address: 172.25.0.203
```

Configure redis.conf

- Master

```conf
port 6379
pidfile /var/run/redis_6379.pid
protected-mode no
timeout 0
tcp-keepalive 300
loglevel notice
requirepass szz123
masterauth szz123

################################# REPLICATION #################################

# Announce the host address instead of the container address
slave-announce-ip 192.168.98.138
# Announce the host port mapped to this container
slave-announce-port 6390
slave-serve-stale-data yes
slave-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5
repl-disable-tcp-nodelay no

##################################### RDB #####################################

dbfilename dump.rdb
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error yes
rdbcompression yes
rdbchecksum yes
dir ./

##################################### AOF #####################################

appendonly yes
appendfilename "appendonly.aof"
appendfsync everysec
no-appendfsync-on-rewrite no
aof-load-truncated yes
aof-use-rdb-preamble no
```

The original master may become a replica after a failover, so `masterauth` must be set on it as well, not only on the replicas.

Comments are kept on their own lines. Redis treats `#` as a comment only at the start of a line. A trailing `# ...` after a directive is read as extra arguments, and the server refuses to load the config.
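Redis only recognizes `#` as a comment at the start of a line. A directive such as `slave-announce-ip 192.168.98.138 # host address` therefore fails to load. A rough check like the following can flag such lines before the containers start.

- `find_inline_comments` is a hypothetical helper, not part of Redis.
- It is a heuristic: a value that legitimately begins with `#` would be flagged too.

```python
from __future__ import annotations


def find_inline_comments(conf_text: str) -> list[tuple[int, str]]:
    """Return (line_number, line) for directives followed by a trailing '#' comment."""
    problems = []
    for lineno, raw in enumerate(conf_text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or full-line comment: both are fine
        # Heuristic: any whitespace-separated token after the directive name
        # that starts with '#' would be passed to Redis as an extra argument.
        if any(token.startswith("#") for token in line.split()[1:]):
            problems.append((lineno, raw))
    return problems
```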

- Slave1

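Same as the master's configuration. In the REPLICATION section, add a `slaveof` directive and announce this replica's own mapped port:

```conf
slaveof 172.25.0.101 6379
# Announce the host address instead of the container address
slave-announce-ip 192.168.98.138
# Announce the host port mapped to this container
slave-announce-port 6391
```

- Slave2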

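```conf
slaveof 172.25.0.101 6379
# Announce the host address instead of the container address
slave-announce-ip 192.168.98.138
# Announce the host port mapped to this container
slave-announce-port 6392
```

The three files above are redis1.conf, redis2.conf and redis3.conf, matching the volumes in docker-compose.yml.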
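The three redis.conf files differ only in their REPLICATION lines:

- redis1.conf (the initial master) has no `slaveof`.
- The other two replicate from 172.25.0.101:6379.
- Each node announces the host IP plus its own mapped port.

Below is a minimal sketch of that pattern. It assumes a hypothetical helper `replication_section` and the addresses from docker-compose.yml. The shared settings (port, passwords, RDB/AOF) would be prepended unchanged.

```python
MASTER_IP = "172.25.0.101"  # initial master on the redis-replication network
HOST_IP = "192.168.98.138"  # host address announced to the other nodes
BASE_PORT = 6390            # host port of redis1; redis2 -> 6391, redis3 -> 6392


def replication_section(node: int) -> str:
    """Return the per-node REPLICATION lines for redis{node}.conf (node = 1, 2, 3).

    Node 1 starts as the master; nodes 2 and 3 replicate from it.
    Every node announces the host IP and its own mapped host port.
    """
    lines = []
    if node != 1:
        lines.append(f"slaveof {MASTER_IP} 6379")
    lines.append(f"slave-announce-ip {HOST_IP}")
    lines.append(f"slave-announce-port {BASE_PORT + node - 1}")
    return "\n".join(lines) + "\n"
```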

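Configure sentinel.conf

- sentinel1

```conf
# Every sentinel listens on the same port inside its container;
# the host ports differ through the Docker bridge port mappings
port 26379

# Monitoring settings: identical on all sentinels, all pointing at the master
# (host IP + mapped port); the final 2 is the quorum
sentinel monitor mymaster 192.168.98.138 6390 2
sentinel auth-pass mymaster szz123
sentinel parallel-syncs mymaster 1
sentinel down-after-milliseconds mymaster 30000
sentinel failover-timeout mymaster 180000

# Address and port announced to the other sentinels (host IP + mapped port)
sentinel announce-ip 192.168.98.138
sentinel announce-port 26380

bind 0.0.0.0
protected-mode no
daemonize no
pidfile /var/run/redis-sentinel.pid
logfile ""
dir /tmp
```

- sentinel2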

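Same as sentinel1, except for the address and port announced to the other sentinels:

```conf
# Address and port announced to the other sentinels
sentinel announce-ip 192.168.98.138
sentinel announce-port 26381
```

- sentinel3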

```conf
sentinel announce-ip 192.168.98.138
sentinel announce-port 26382
```

The three files above are sentinel1.conf, sentinel2.conf and sentinel3.conf.
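The trailing `2` in `sentinel monitor mymaster 192.168.98.138 6390 2` is the quorum. It is the number of sentinels that must agree the master is unreachable before it is marked objectively down.

Actually starting a failover has a second requirement. One sentinel must be elected leader by a majority of all sentinels, and by at least the quorum if the quorum is larger than a majority.

The sketch below models only these two counting rules. It assumes every reachable sentinel sees the master as down and votes for the same leader. The function names are illustrative.

```python
def is_odown(sentinels_reporting_down: int, quorum: int) -> bool:
    """ODOWN: at least `quorum` sentinels consider the master unreachable."""
    return sentinels_reporting_down >= quorum


def votes_needed_for_failover(total_sentinels: int, quorum: int) -> int:
    """Votes a sentinel needs to become failover leader:
    a majority of all sentinels, and never fewer than the quorum."""
    majority = total_sentinels // 2 + 1
    return max(majority, quorum)


def can_failover(reachable_sentinels: int, total_sentinels: int, quorum: int) -> bool:
    """Assuming all reachable sentinels agree and vote alike, can they fail over?"""
    return (is_odown(reachable_sentinels, quorum)
            and reachable_sentinels >= votes_needed_for_failover(total_sentinels, quorum))
```

With three sentinels and a quorum of 2, as configured here, losing one sentinel still leaves enough votes for a failover. Losing two does not.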

Start

```shell
docker-compose up -d
```

```
✔ Container redis-slave2 Started
✔ Container redis-sentinel3 Started
✔ Container redis-sentinel1 Started
✔ Container redis-master Started
✔ Container redis-sentinel2 Started
✔ Container redis-slave1 Started
```

Check the master/replica logs

- master

```
1:M 14 Oct 2024 06:53:17.820 * Synchronization with replica 192.168.98.138:6391 succeeded
1:M 14 Oct 2024 06:53:17.826 * Replica 192.168.98.138:6392 asks for synchronization
1:M 14 Oct 2024 06:53:17.826 * Full resync requested by replica 192.168.98.138:6392
1:M 14 Oct 2024 06:53:17.826 * Starting BGSAVE for SYNC with target: disk
1:M 14 Oct 2024 06:53:17.827 * Background saving started by pid 21
21:C 14 Oct 2024 06:53:17.849 * DB saved on disk
21:C 14 Oct 2024 06:53:17.849 * Fork CoW for RDB: current 0 MB, peak 0 MB, average 0 MB
1:M 14 Oct 2024 06:53:17.922 * Background saving terminated with success
1:M 14 Oct 2024 06:53:17.922 * Synchronization with replica 192.168.98.138:6392 succeeded
```

- slave1, slave2

```
1:S 14 Oct 2024 06:53:16.764 * Ready to accept connections tcp
1:S 14 Oct 2024 06:53:17.718 * Connecting to MASTER 172.25.0.101:6379
1:S 14 Oct 2024 06:53:17.719 * MASTER <-> REPLICA sync started
```

Check that each sentinel is running correctly

- sentinel1, sentinel2, sentinel3

```
1:X 14 Oct 2024 06:53:16.919 * Running mode=sentinel, port=26379.
1:X 14 Oct 2024 06:53:16.948 * Sentinel new configuration saved on disk
1:X 14 Oct 2024 06:53:16.948 * Sentinel ID is 2c6dabfae5332e97de83d53b9ec5856dc86a922b
1:X 14 Oct 2024 06:53:16.948 # +monitor master mymaster 192.168.98.138 6390 quorum 2
1:X 14 Oct 2024 06:53:17.882 * +sentinel sentinel ccf1e3a3bb778b2f0814515c75f0adf0483c467a 192.168.98.138 26381 @ mymaster 192.168.98.138 6390
1:X 14 Oct 2024 06:53:17.922 * Sentinel new configuration saved on disk
1:X 14 Oct 2024 06:53:18.674 * +sentinel sentinel f6865ebad2997c6d6c04f2f7761692e7005472fb 192.168.98.138 26382 @ mymaster 192.168.98.138 6390
1:X 14 Oct 2024 06:53:18.706 * Sentinel new configuration saved on disk
1:X 14 Oct 2024 06:53:26.963 * +slave slave 192.168.98.138:6391 192.168.98.138 6391 @ mymaster 192.168.98.138 6390
1:X 14 Oct 2024 06:53:26.974 * Sentinel new configuration saved on disk
1:X 14 Oct 2024 06:53:26.974 * +slave slave 192.168.98.138:6392 192.168.98.138 6392 @ mymaster 192.168.98.138 6390
1:X 14 Oct 2024 06:53:26.976 * Sentinel new configuration saved on disk
```

The log above is sentinel1's, and everything in it checks out:

- one `+monitor` entry;
- two `+sentinel` entries, for the other two sentinels on ports 26381 and 26382;
- two `+slave` entries, for the two replicas on ports 6391 and 6392;
- correct IPs and ports throughout.

So sentinel1 is running normally. Sentinel2 and Sentinel3 show equivalent output, each listing the other two sentinels.
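The check above (one `+monitor`, two `+sentinel` and two `+slave` entries) can be scripted. This sketch counts event names in sentinel log text, for example the output of `docker logs redis-sentinel1`. The regular expression assumes the log-line layout shown above, and the function names are hypothetical.

```python
import re
from collections import Counter

# An event name follows the log-level marker ('#' or '*'), e.g.
# "1:X 14 Oct 2024 06:53:17.882 * +sentinel sentinel <id> ..."
EVENT_RE = re.compile(r"[#*] ([+-][\w-]+) ")


def count_events(log_text: str) -> Counter:
    """Count Sentinel events such as +monitor, +sentinel and +slave in a log."""
    counts: Counter = Counter()
    for line in log_text.splitlines():
        match = EVENT_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def looks_healthy(log_text: str, other_sentinels: int = 2, replicas: int = 2) -> bool:
    """Heuristic: the master is monitored and all peer sentinels and replicas were found."""
    counts = count_events(log_text)
    return (counts["+monitor"] >= 1
            and counts["+sentinel"] >= other_sentinels
            and counts["+slave"] >= replicas)
```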

Verify Sentinel

- Take the master down

```shell
docker stop redis-master
```

- Check sentinel1's log output

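```
1:X 14 Oct 2024 07:24:43.422 # +sdown master mymaster 192.168.98.138 6390
1:X 14 Oct 2024 07:24:43.524 * Sentinel new configuration saved on disk
1:X 14 Oct 2024 07:24:43.524 # +new-epoch 1
1:X 14 Oct 2024 07:24:43.556 * Sentinel new configuration saved on disk
1:X 14 Oct 2024 07:24:43.556 # +vote-for-leader f6865ebad2997c6d6c04f2f7761692e7005472fb 1
1:X 14 Oct 2024 07:24:43.556 # +odown master mymaster 192.168.98.138 6390 #quorum 2/2
1:X 14 Oct 2024 07:24:43.556 * Next failover delay: I will not start a failover before Mon Oct 14 07:30:44 2024
1:X 14 Oct 2024 07:24:44.663 # +config-update-from sentinel f6865ebad2997c6d6c04f2f7761692e7005472fb 192.168.98.138 26382 @ mymaster 192.168.98.138 6390
1:X 14 Oct 2024 07:24:44.663 # +switch-master mymaster 192.168.98.138 6390 192.168.98.138 6391
1:X 14 Oct 2024 07:24:44.663 * +slave slave 192.168.98.138:6392 192.168.98.138 6392 @ mymaster 192.168.98.138 6391
1:X 14 Oct 2024 07:24:44.663 * +slave slave 192.168.98.138:6390 192.168.98.138 6390 @ mymaster 192.168.98.138 6391
1:X 14 Oct 2024 07:24:44.711 * Sentinel new configuration saved on disk
1:X 14 Oct 2024 07:25:14.669 # +sdown slave 192.168.98.138:6390 192.168.98.138 6390 @ mymaster 192.168.98.138 6391
```

- Detection and election.
  - Sentinel first marks the master as subjectively down (`+sdown`) after it fails to respond for `down-after-milliseconds`.
  - The master becomes objectively down (`+odown`) once enough sentinels agree: here the quorum of 2 (`#quorum 2/2`).
  - `+new-epoch 1` starts the first election round. In it, sentinel1 votes (`+vote-for-leader`) for the sentinel announcing port 26382, i.e. sentinel3, as failover leader.
  - Because it voted for another sentinel, sentinel1 will not start a failover of its own before the time printed in `Next failover delay`. That is twice `failover-timeout`, about 6 minutes here.

- Next, the leader picks a replica with `+selected-slave` and promotes it with `+promoted-slave`. For example, `+selected-slave slave 172.25.0.102:6379` selects 172.25.0.102, which is Slave1 in docker-compose.yml. These two events are printed only by the leader, so they do not appear in sentinel1's log above. Sentinel1 learns the outcome through `+config-update-from` and `+switch-master`, which here moves `mymaster` from port 6390 to 6391 (Slave1).

- Finally, Sentinel keeps checking whether 101 (the old master) has come back. `+sdown slave 172.25.0.101:6379 172.25.0.101 6379 @ mymaster 172.25.0.102 6379` shows that 101, now tracked as a replica of the new master, is still offline.

172.25.0.102 and 172.25.0.101 are container-internal addresses. The log above shows host addresses instead, because each node announces the host IP and its mapped port.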
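Every sentinel logs `+switch-master <name> <old-ip> <old-port> <new-ip> <new-port>` once it learns about the new master. This includes non-leaders such as sentinel1 above, so that single line is enough to find out where the master moved. Below is a minimal sketch that extracts the latest such event from log text. The `Switch` type and `last_switch` function are hypothetical names.

```python
import re
from typing import NamedTuple, Optional, Tuple

# "+switch-master <name> <old-ip> <old-port> <new-ip> <new-port>"
SWITCH_RE = re.compile(
    r"\+switch-master (?P<name>\S+) (?P<old_ip>\S+) (?P<old_port>\d+) "
    r"(?P<new_ip>\S+) (?P<new_port>\d+)"
)


class Switch(NamedTuple):
    name: str
    old: Tuple[str, int]
    new: Tuple[str, int]


def last_switch(log_text: str) -> Optional[Switch]:
    """Return the most recent +switch-master event in a Sentinel log, if any."""
    last = None
    for m in SWITCH_RE.finditer(log_text):
        last = Switch(m["name"],
                      (m["old_ip"], int(m["old_port"])),
                      (m["new_ip"], int(m["new_port"])))
    return last
```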

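Log into slave2 and check its replication info. In this run it was Slave2 (port 6392) that had been promoted; which replica wins the election can differ from run to run.

```shell
docker exec redis-slave2 redis-cli -a szz123 info replication
```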

```
# Replication
role:master
connected_slaves:1
slave0:ip=192.168.98.138,port=6391,state=online,offset=24833,lag=1
master_failover_state:no-failover
master_replid:c6114e5d2f0cbd340017a1b7034d758e22610683
master_replid2:30dc1cc7fb7f4a06524aa4d1df3228e550b30f0e
master_repl_offset:24833
second_repl_offset:5666
repl_backlog_active:1
repl_backlog_size:1048576
repl_backlog_first_byte_offset:1
repl_backlog_histlen:24833
```

The result confirms that slave2 has become the master, with one replica (port 6391, Slave1).

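- Bring the original master back online (for example with `docker start redis-master`)

```
1:X 14 Oct 2024 07:37:00.163 # -sdown slave 192.168.98.138:6390 192.168.98.138 6390 @ mymaster 192.168.98.138 6392
1:X 14 Oct 2024 07:37:10.146 * +convert-to-slave slave 192.168.98.138:6390 192.168.98.138 6390 @ mymaster 192.168.98.138 6392
```

`-sdown slave 192.168.98.138:6390` shows that Sentinel clears the down state of this replica (the original master). `+convert-to-slave` then reconfigures it as a replica of the new master at 6392.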

- Check slave2 again

```
# Replication
role:master
connected_slaves:2
```

It now has two replicas.
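Both checks above read the `info replication` output by eye. A small parser makes them scriptable. This sketch assumes the `key:value` layout shown above, and the helper names are hypothetical.

```python
from __future__ import annotations


def parse_info(text: str) -> dict[str, str]:
    """Parse INFO output ("key:value" lines, "# Section" headers) into a dict."""
    info: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and section headers such as "# Replication"
        key, sep, value = line.partition(":")
        if sep:
            info[key] = value
    return info


def replicas(info: dict[str, str]) -> list[dict[str, str]]:
    """Turn slave0, slave1, ... entries ("ip=...,port=...,state=...") into dicts."""
    result = []
    for i in range(int(info.get("connected_slaves", "0"))):
        entry = info.get(f"slave{i}")
        if entry is None:
            continue
        result.append(dict(field.split("=", 1) for field in entry.split(",")))
    return result
```

The text could come from `subprocess.run(["docker", "exec", "redis-slave2", "redis-cli", "-a", "szz123", "info", "replication"], capture_output=True, text=True).stdout`. Checking `info["role"] == "master"` and `len(replicas(info))` then reproduces the two manual checks.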